Numbers don’t lie. That is the principle of A/B testing in a few words. Instead of speculating about or forecasting the target group’s behaviour, you observe the data the target group itself provides. The procedure is simple: two variants of a website are tested online, and the A/B test measures their conversion rates. The better version wins and, if necessary, is optimised further with additional A/B tests.

What is A/B testing?

A/B tests are randomised experiments that compare two or more versions of a website, app or advertising campaign. Statistically significant data then shows which variation is better at achieving a given goal.

The users don’t know that they are participating in an experiment, and the website operator leaves the assignment of variants to chance, which makes the results more credible. In medicine, this procedure is known as a double-blind test: the effectiveness of a drug is assessed by giving a placebo to some of the patients (the control group) while the others receive the actual drug. Neither the administering doctor nor the patient knows which of the two the patient has been given. This ignorance is a prerequisite for the test to work.
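The random assignment described above can be sketched in a few lines of Python. This is a minimal illustration, not the method of any particular testing tool: hashing a visitor ID is an assumption made here so that the same visitor always sees the same variant, and the names `assign_variant`, `user_id` and `experiment` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a visitor to a variant, pseudo-randomly but reproducibly.

    Assumption: each visitor has a stable identifier (for example a cookie value).
    """
    # Including the experiment name means different tests split visitors differently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the hash as a large integer and pick a variant by remainder.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same visitor always lands in the same variant of a given experiment.
print(assign_variant("visitor-42", "cta-button-text"))
```

Neither the visitor nor the operator chooses the variant; the split is decided by the hash alone and comes out roughly even over many visitors.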

A/B testing or split testing?

One often reads about split tests or split testing on the Internet, but which one is the correct term? A/B test or split test? The difference is as follows:

Both terms are often used as synonyms, and for beginners the difference hardly matters. However, split testing, in which the variants are typically served from separate URLs, can have adverse SEO effects: Google may classify two almost identical pages as duplicate content. This variant should therefore only be used if the page to be tested differs significantly from the original. The other option is to set a canonical tag on the test page that points to the original page. This signals to Google that the original is the preferred version, so that the test page is not perceived as duplicate content.

It is crucial that the results can be validated and that users are actually presented with two variants of a website that differ in at least one element.

A/B testing – a simple principle

A/B tests are based on a very simple principle: to find out which variation works better. An example:

This is, of course, a very simple example. The bigger your website is, the more complex it can be to set up an A/B test.

Measurable data instead of speculation

A/B testing is revolutionising the marketing and strategies of start-ups. Whereas it was previously necessary to draw up detailed plans for a project before its launch, it is now possible to start a project online immediately and test which variants appeal to readers the most during the start-up phase. A/B testing, therefore, is a cost-saving strategy for start-ups.

A/B or split tests rely on measurable data instead of speculation or guesswork. But why is there a need for such tests in the first place? The answer is simple: Often campaigns – even if they were developed by professionals and based on market research data – ultimately prove to be ineffective.

A/B tests, on the other hand, allow people to continuously optimise ongoing marketing campaigns. A/B testing is, so to speak, market research in real-time. The development of campaigns responds flexibly to the reactions of the target group. Of course, insights from the science of advertising are not ignored. Instead, the specialist knowledge of experts is applied directly in a current campaign in order to respond to initial results. For example, you can test,

Aspects such as design and usability can also be tested through A/B tests.

What is A/B testing used for?

The range of applications for A/B tests is very extensive. For example, the statistical test is suitable for the following advertising measures or elements on websites:

When using A/B tests, it is crucial to set macro goals that mark the endpoint of the tests, especially in large projects. Once a predefined conversion rate is reached, the test can be brought to an end. However, it is also possible to run tests until the project comes to an end and then continuously use A/B tests as and when needed.

How do I create a good hypothesis?

The hypothesis is the foundation of an A/B test. Without a hypothesis, you are ultimately running a meaningless test. The text for a call-to-action button is used here as an example. This is how it’s done before you start the test:

Formulate the question:

Why do very few visitors click on the call-to-action button?

Formulate the hypothesis:

The visitors do not click on the call-to-action button because the request to click sounds too aggressive (“Click here!”).

Formulate the solution:

Visitors will click the call-to-action button more often if the request is phrased in a friendlier and more specific way (“Do you want to know more?”).

Formulate a clear metric before running the test:

What goal do you want to achieve? Example: If the click rate increases from 5% to 10%, the new version of the call-to-action button has been more effective.

It is important to test each hypothesis individually and independently of other elements. As a rule of thumb: when testing a single element, a difference in the results can only be attributed to that element if everything else is identical in both versions.

After performing this test and achieving the measurable results, you can then make further optimisations. Examples:

This way, your web page will be optimised gradually. Important: When running an A/B test, set a macro goal for each project to mark the end of the test.

What does significance mean?

At some point in every A/B test, the term “significance” is bound to come up. This word commonly means “being important or meaningful”. But in statistics, the term significance has a strictly defined meaning.

A result is statistically significant if it is very unlikely to have arisen by chance alone.

A simple example:

An urn contains 100 balls, some white and some red. How many white and how many red balls are there? If you draw only two balls, the result is certainly not statistically significant. However, if you draw 50 balls, you can already make a well-founded statement about the probable colour composition, based on a reliable sample. The same is true for an A/B test. Only after a certain number of visits to your website can you make reasonably meaningful statements about the effectiveness of your content.

When is an A/B test significant?

Suppose the first results of a split test are in. Variant A shows a conversion rate of 12 per cent, while variant B achieves only 11 per cent. 2000 visitors saw the pages: 1000 were shown variant A and the other 1000 variant B. Is the result significant, i.e. meaningful? No, because with this number of visitors a difference of one percentage point can easily be down to chance.

A similar example:

Variant A: 10 visitors, 30% conversion

Variant B: 10 visitors, 50% conversion

Again, the result is not significant, despite the large difference in the conversion rate. With so few visitors, the result can also be explained as pure chance. However, if the conversion rate is 100% for variant A and 0% for variant B, the difference can be significant even with this few visitors.

Rule of thumb:

The more visitors are counted and the larger the difference in the measured values, the more reliable the result.

And what if the experiment does not show any significant differences? This is also a result. In this case, the measurement suggests that the element has little influence on conversion, at least at the tested sample size. Further tests on this element can therefore usually be omitted.

The calculation of statistical significance is based on the so-called chi-squared (χ²) test. The variables to be used for your A/B test are as follows:

Visitors to the original variant

Visitors to the comparison variant

Conversions of the original variant

Conversions of the comparison variant

Total number of visitors

Total number of visitors with conversion

Total number of visitors without conversion

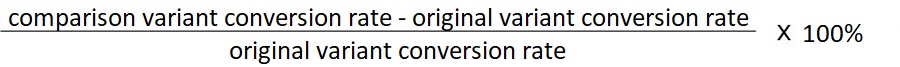
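The variables listed above are enough to compute the chi-squared statistic by hand. The following is a minimal sketch of Pearson’s chi-squared test for a 2×2 table without continuity correction; whether the calculators mentioned in this article apply a correction is not stated, so treat the function name `chi_squared_2x2` and its details as assumptions.

```python
import math


def chi_squared_2x2(visitors_a, conversions_a, visitors_b, conversions_b):
    """Return (chi-squared statistic, p-value) for two variants."""
    total_visitors = visitors_a + visitors_b
    total_conversions = conversions_a + conversions_b
    total_without = total_visitors - total_conversions
    if total_conversions == 0 or total_without == 0:
        raise ValueError("the test needs both converting and non-converting visitors")

    # Observed counts: one row per variant, columns = converted / not converted.
    observed = [
        [conversions_a, visitors_a - conversions_a],
        [conversions_b, visitors_b - conversions_b],
    ]
    row_totals = [visitors_a, visitors_b]
    column_totals = [total_conversions, total_without]

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Count expected if both variants converted equally well.
            expected = row_totals[i] * column_totals[j] / total_visitors
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With one degree of freedom the p-value has this closed form.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# The example from the text: 12% vs 11% conversion with 1000 visitors each.
chi2, p_value = chi_squared_2x2(1000, 120, 1000, 110)
print(f"chi2 = {chi2:.3f}, p = {p_value:.3f}")
```

A p-value below 0.05 corresponds to the 95% confidence level usually required. For the example above, the p-value is far above that threshold, which matches the conclusion that the difference is not significant.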

The value by which one variant performs better than the other is called the uplift and you can calculate it as follows:
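The formula itself is missing at this point, so the sketch below uses one common definition as an assumption: the relative uplift, i.e. the difference between the two conversion rates divided by the conversion rate of the original. The function name `uplift` is hypothetical.

```python
def uplift(rate_original: float, rate_variant: float) -> float:
    """Relative improvement of the variant over the original (0.25 means +25%)."""
    if rate_original <= 0:
        raise ValueError("the original conversion rate must be greater than zero")
    return (rate_variant - rate_original) / rate_original


# The click-rate example from the hypothesis section: 5% rising to 10%.
print(f"{uplift(0.05, 0.10):.0%}")
```

Note that an increase from 5% to 10% is a difference of five percentage points but a relative uplift of 100%, so it is worth stating which of the two figures a report refers to.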

To make sure that your A/B test gives the right results, you can use the following online calculators:

A mathematical digression: understanding the online calculators

You can use these practical calculators to check whether your test results show significant differences. It is also possible to calculate the optimal duration of a test by determining the minimum sample size. To do this, you need to know the current conversion rate and average traffic on the testing page, as well as the number of test variants you plan to run.
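How such a calculator might arrive at a minimum sample size can be sketched with the standard normal-approximation formula for comparing two proportions. This is an assumption about the method, not a description of any specific calculator. The statistical power of 80% is a conventional default that the text does not mention, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def min_sample_size_per_variant(baseline_rate, expected_rate,
                                confidence=0.95, power=0.80):
    """Visitors needed per variant to detect a change from baseline to expected rate."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def estimated_test_days(sample_per_variant, number_of_variants, daily_visitors):
    """Days needed if the daily traffic is split evenly across all variants."""
    return math.ceil(sample_per_variant * number_of_variants / daily_visitors)


# Current conversion rate 5%, hoped-for rate 10%, two variants, 100 visitors a day.
needed = min_sample_size_per_variant(0.05, 0.10)
print(needed, "visitors per variant,",
      estimated_test_days(needed, 2, 100), "days")
```

The smaller the difference you want to detect, the faster the required sample grows, because the effect enters the formula squared.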

Every sample only approximates the population it is drawn from, so any estimate based on it carries a degree of uncertainty. This is where confidence intervals come into play, and many of the online calculators are based on them. As the name suggests, the term refers to trust: with a random sample you cannot know everything about the population, but you can use the sample results to define a trustworthy range. The estimated parameter is placed in an interval with a lower and an upper limit. Because these limits are calculated from the sample, they are themselves random variables. The interval indicates the range within which the true value in the population is likely to lie. The probability with which an interval constructed in this way contains the true value is called the confidence level. For your A/B test, the following convention matters: at a confidence level below 95%, the difference between the original variant and the test variant is usually not regarded as statistically significant.

Let’s illustrate the whole thing with an example:

A hot dog stand in a busy town centre sells an average of 800 hot dogs a day, with a standard deviation of 70. Assuming a normal distribution, about 95% of the results lie within ± 2 standard deviations (more precisely, 1.96), symmetrically around the mean. The lower limit of the interval is therefore the mean – 2 × standard deviation and the upper limit the mean + 2 × standard deviation. At 95%, the number of sausages sold is between 660 and 940 (800 – 2 × 70; 800 + 2 × 70). In other words, daily sales fall between 660 and 940 with a probability of 95 per cent, and the probability of error is 5 per cent. At a confidence level of 99 per cent, the range widens to ± 2.58 standard deviations around the mean and the probability of error drops to one per cent. A test that has to meet this stricter level requires a correspondingly larger sample, and therefore a longer minimum running time.
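The hot dog figures can be reproduced with Python’s standard library. This is a small sketch under the same assumption as the example, namely normally distributed daily sales; the function name `normal_range` is hypothetical. It uses the exact multipliers (about 1.96 and 2.58), so the 95% limits come out slightly inside the rounded values 660 and 940.

```python
from statistics import NormalDist


def normal_range(mean, std_dev, level):
    """Symmetric range around the mean that covers `level` of a normal distribution."""
    # For level 0.95 this is about 1.96, for 0.99 about 2.58.
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return mean - z * std_dev, mean + z * std_dev


for level in (0.95, 0.99):
    low, high = normal_range(800, 70, level)
    print(f"{level:.0%}: {low:.0f} to {high:.0f} hot dogs per day")
```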

If you want to forecast how the hot dog seller will “perform” in the future, you should also keep seasonal or temperature-related fluctuations in mind. Such external influences are a frequent source of statistical (random) error, which, unlike systematic error, must always be factored into the calculation.

Incidentally, it is common practice to report sample results together with a margin of error. This is the maximum expected deviation of the sample-based results from the real values in the population.

For your A/B test, this means that the confidence interval tells you the range in which the conversion rate is likely to lie. The more data your sample contains, the narrower the interval and the more reliable your statement will be.
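Applied to a conversion rate, the interval can be sketched as follows. The code uses the simple normal-approximation (Wald) interval, which is an assumption: the calculators may use a different method, and this approximation becomes unreliable for very small samples or rates close to 0% or 100%. The function name `conversion_interval` is hypothetical.

```python
import math
from statistics import NormalDist


def conversion_interval(conversions, visitors, level=0.95):
    """Approximate confidence interval for a conversion rate."""
    rate = conversions / visitors
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    # Standard error of a proportion: it shrinks as the number of visitors grows.
    standard_error = math.sqrt(rate * (1 - rate) / visitors)
    return rate - z * standard_error, rate + z * standard_error


# The two variants from the significance example: 12% and 11% of 1000 visitors.
for name, conversions in (("A", 120), ("B", 110)):
    low, high = conversion_interval(conversions, 1000)
    print(f"Variant {name}: {low:.1%} to {high:.1%}")
```

The two intervals overlap widely, which fits the earlier finding that a one-point difference at this sample size is not significant. With ten times as many visitors at the same rates, each interval would be considerably narrower.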

Tools for A/B tests

What are the best A/B testing tools to test the performance of different versions? First and foremost, there is Google Analytics. This tool from the market leader, Google, contains numerous features for measuring conversion.

For example, Google AdSense can be used to measure the RPM (revenue per mille, i.e. the estimated revenue per 1,000 impressions). This value is particularly important for the placement of ads on a website.

Google Analytics offers an A/B/n model in which not just two but up to 10 versions of a page can be tested for conversion. What’s the best way to run such a test?

Enter the destination or the measured value (here you can also define the percentage of traffic participating in the experiment).

Configure the test: select two pages that will be presented to the participants.

Insert the test code into the pages.

Start and check.

Google AdSense also uses the principle of A/B testing. Significant differences in the click rates of differently formulated small copy ads provide information about which formulations work best.

How do you run A/B tests?

A/B testing is a scientific process based on statistical findings. It is all about numbers – that is why it is particularly important to work accurately. A professional approach to A/B testing goes through the following stages:

Analyse the opportunities and potential of the website. Where are the problems?

Work out hypotheses. Example: Problem A is caused by B.

Derive possible solutions: Problem A can be solved by changing B.

In case of several problems: Resolve each one according to importance and deadline.

Run tests and measure results.

The various problems are gradually solved in order of importance.

Which problem should be solved first depends mainly on its effect on conversion. But which conversion is crucial for success? The answer lies in the goal of a website:

The results of A/B tests are often surprising. It is frequently the smallest improvements that turn out to be crucial for generating sales or conversions.

A/B tests and artificial intelligence

Many A/B testing tools use artificial intelligence. But how does artificial intelligence (AI, or more specifically machine learning) work in A/B testing? The tool draws on historical data, live data and best practices to make recommendations or even to carry out the optimisation process itself. AI-based algorithms recognise recurring patterns, derive recommendations from them or control measures independently. Because these algorithms learn, the tool can make improvements during an ongoing test and continuously increase the informative value of the results.

The costs of powerful A/B testing tools that are based on AI are sometimes very high. However, there are cheaper trial versions available for most programmes.

Multivariate testing

Multivariate testing (MVT) is used mainly on websites that have a lot of visitors, because the test setup is much more complex. MVT makes it possible to test different features of a website simultaneously. It tests not only the effectiveness of individual elements (headlines, calls to action, design etc.) but also how they interact.

An example:

Headline A is (viewed in isolation) more effective than Headline B.

Call-to-action button A is (also viewed in isolation) just as effective as call-to-action button B.

However, if Headline B (the weaker headline) and call-to-action button A appear together, the conversion is much better.

With multivariate testing, the best combination of elements can be determined. In this example, the result is that the weaker Headline B outperforms Headline A when it is used together with call-to-action button A.
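The headline and button example can be sketched as follows. The visitor and conversion counts are invented purely for illustration, and the names `conversion_rates` and `best_combination` are hypothetical. The sketch only shows how every combination becomes its own page version and how the strongest one is picked.

```python
from itertools import product

headlines = ["Headline A", "Headline B"]
buttons = ["Button A", "Button B"]

# Every headline is paired with every button: 2 x 2 = 4 page versions.
combinations = list(product(headlines, buttons))

# Hypothetical (visitors, conversions) per combination, invented for illustration.
results = {
    ("Headline A", "Button A"): (5000, 250),
    ("Headline A", "Button B"): (5000, 260),
    ("Headline B", "Button A"): (5000, 340),
    ("Headline B", "Button B"): (5000, 210),
}


def conversion_rates(results):
    """Conversion rate for each combination of elements."""
    return {combo: conv / visitors for combo, (visitors, conv) in results.items()}


def best_combination(results):
    """The combination with the highest conversion rate."""
    rates = conversion_rates(results)
    return max(rates, key=rates.get)


print(len(combinations), "versions to test; best:", best_combination(results))
```

The number of versions multiplies with every additional element, and the traffic is divided among all of them. This is why multivariate tests need a lot of visitors before any difference between combinations can become significant.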

A/B tests for a successful relaunch

Website operators are often faced with the question of which theme to choose before relaunching a web page. This is where A/B tests come in handy. With theme-split tests, the website operator can play it safe and decide on the look that best appeals to visitors. There are WordPress plugins for this purpose, which make use of Google Analytics, for example.

WordPress is the most popular content management system. It is not only ideal for blogs, but is also successfully used for launching landing pages. With A/B tests, website operators can further increase the conversion of pages created with WordPress. For this purpose, there are many plugins available on the market.

Before using a WordPress plugin for A/B tests, make sure to check which WordPress version it is compatible with.

WordPress plugins can be used to optimise individual page elements as well as entire web pages.

This is how you reach the readers

Well-written content needs to be read by people. But how do you achieve this goal when the Internet is flooded with content that is presented to users whenever they search on Google for a specific term? The decisive factors are the headline, the title and the short description.

Because these web page elements are so important, they often become the subject of A/B tests. When formulating headlines, titles and short descriptions, every word counts.

If these three elements are correct, the chance of the user clicking on a page increases. This is the first step. What then counts is the quality of the text. Now the first paragraph decides whether the user will continue reading. The first paragraph, like the elements mentioned above, works as an appetiser.

The following text properties, among others, have proven to be successful stylistic devices in A/B testing:

These guidelines may appear simple at first glance. But when it comes to implementation, many inexperienced writers struggle to follow them.

A successful website primarily works psychologically: For example, the visitor must immediately feel addressed. This can only be achieved if the website content speaks the visitor’s language. Finding the right words and using the best tonality isn’t easy, especially for inexperienced writers. This is why you should seek the help of professionals.

Common A/B testing mistakes

Mistakes are often made in A/B testing. However, the source of the error is usually not the tool itself, but the way it is applied and the way the data is interpreted. The most common mistakes are:

Conclusions are drawn prematurely from the first results.

Solution: Wait until the amount of data is large enough to show significant results.

The tests do not run 24/7.

Solution: Run the tests around the clock and on every day of the week. There are significant differences between weekdays, times of day, etc.

A test is carried out haphazardly, without a hypothesis.

Solution: Create a hypothesis for testing purposes. Example: addressing your customers directly with ‘you’ is more effective than using a third person tone (or vice versa).

Too many variations are tested at once.

Solution: Choose the number of variations depending on the number of visitors.

Rule of thumb: The more visitors a website has, the more variations can be tested at the same time. With too few visitors, the results rarely reach significance.

A/B tests for ongoing conversion optimisation

The findings of A/B tests can be implemented directly, which is why the tests can be used continuously. They are already being run in ongoing campaigns right up to the end of the campaign. The ongoing optimisation looks something like this:

First, two versions are put online. If a version proves to be more effective, it remains online. In addition, a variation of the winning version is created, which in turn is shown to half of the visitors. And so it goes on until there are no significant differences in the conversion.

Fully automated website optimisation? It sounds a bit like a distant dream. But it is quite possible that websites will fully optimise themselves in a few years’ time.

Concrete data for your marketing strategy

The decision for a specific strategy is now less determined by feeling than by concrete data. What is decisive is what the user prefers. A/B tests can be used to find this out. These are applied in real-time – during the runtime of campaigns, content can be continuously optimised. Large websites with millions of visitors in particular use A/B tests to minimise risks. The key advantages of A/B tests are:

As mentioned before, the written word has a huge impact on customer acquisition and conversions. Good quality texts always work effectively. And with A/B testing, the success of content can be measured.
